Recently I decided to put together a home lab, as I had finally outgrown my single-server setup. It was fine for file storage and basic Plex features, but I really wanted to get into hosting various VM servers for tinkering and learning. I’ll cover the full journey in a later post, but I wanted to share some of what I learned along the way.

I had a really hard time finding the right information for my needs. I tried various operating systems and hardware configurations before I finally found the setup I enjoyed the most. The setup is constantly changing as I research and test new things, so this isn’t final. I have to give credit to the YouTube channels below for the wealth of information they provided me, and for the motivation to keep trucking on. Please check them out; I’m sure you will find something that interests you or helps you on your own journey.

YouTube – Lawrence Technology Services

For hardware, I tried a few different options for a remote management client, but finally settled on an Intel NUC 8 (Windows 10, dual-core HT 2.2GHz with 3.2GHz Turbo, 4GB RAM, 1TB HDD) for $360. It’s a small, low-power setup that does exactly what I need it to do: provide a remote-connected client that is secure and capable of managing my systems one way or another. I chose a locked-down installation of Windows 10 with strict antivirus, antimalware, and firewall rules. It provides everything I need for administering my various ESXi and VM hosts.

For firewall duties, I wanted to go more of an enterprise route, but I knew most of those options were out of my price range. I ended up going with pfSense and have loved what it can do. Don’t get me wrong, the setup process and having to learn on the fly were painful, but well worth it in the end. I could have gone with one of Netgate’s purpose-built appliances, but I liked the idea of building a custom solution even more. I chose to buy an inexpensive Hyve Zeus dedicated to this task for several reasons, the first being cost. Although the Netgate 1100 pfSense+ Security Gateway ($190) was a reasonable deal, I knew I could build a much more capable and flexible setup on my own. I purchased a Hyve Zeus v1 1U server (2x quad-core Xeon E5-2609 2.4GHz, 16GB RAM, no HDD) for $140 and mirrored two 128GB Transcend SSDs I found for around $37 each. A Silicom PEG6I six-port Gigabit PCIe network card ($100) rounded out the build. I was pleasantly surprised when the Zeus arrived, as the seller had upgraded the CPUs to six-core, hyper-threaded Xeon E5-2640s @ 2.5GHz for free. I tell myself it’s because I’m such a great return customer, but I’m pretty sure nobody there really cares. The final cost of the build was roughly $327, including the server, SSDs, 6-port NIC, and server rails. A side note: it is often recommended to disable Hyper-Threading on a pfSense box, as it can introduce network latency. Thankfully, that’s an easy change in the BIOS.
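To see where the roughly $327 total comes from, here is a quick tally of the prices quoted above. The rails were not priced on their own, so this sketch simply assumes they account for whatever is left over; that figure is inferred, not a quoted price.

```python
# Rough cost breakdown for the pfSense box, using the prices quoted in the post.
# Assumption: the rails (and any shipping) account for whatever remains of the
# ~$327 total, since they were not priced separately.
PARTS = {
    "Hyve Zeus v1 1U server": 140,
    "2x Transcend 128GB SSD (~$37 each)": 2 * 37,
    "Silicom PEG6I 6-port NIC": 100,
}
REPORTED_TOTAL = 327
NETGATE_1100 = 190

itemized = sum(PARTS.values())
rails_and_misc = REPORTED_TOTAL - itemized
premium_over_netgate = REPORTED_TOTAL - NETGATE_1100

print(f"Itemized parts:        ${itemized}")
print(f"Rails/misc (implied):  ${rails_and_misc}")
print(f"Extra vs Netgate 1100: ${premium_over_netgate}")
```

The itemized parts come to $314, leaving about $13 for the rails, and the custom build ends up around $137 more than the Netgate 1100 in exchange for the extra ports and CPU headroom described above.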

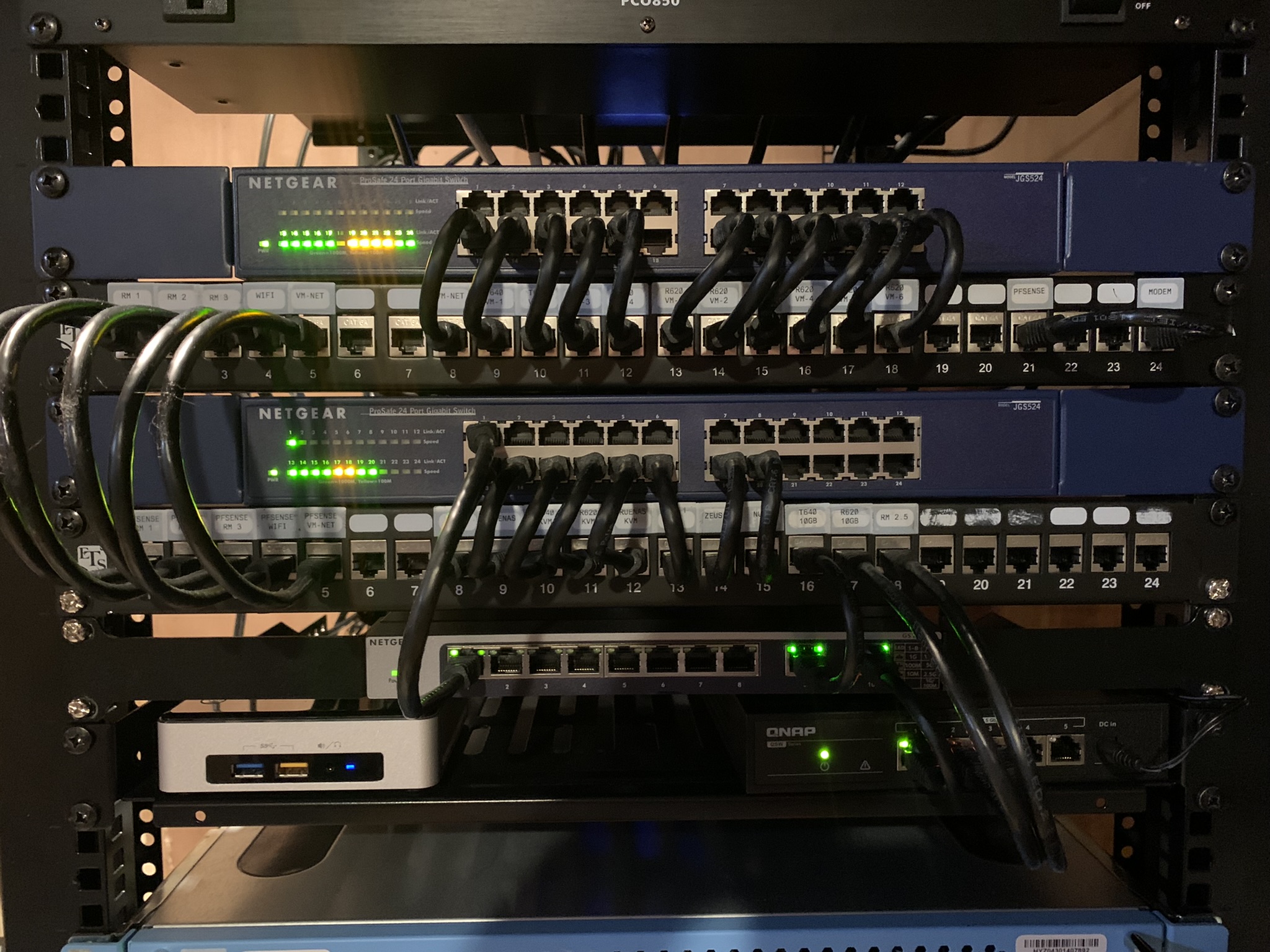

One additional reason I went this route was that managed gigabit switches were still way too expensive for my tastes. With the 6-port card installed, I had a total of 8 usable ports, which I set up as the WAN and LAN interfaces plus 6 DMZs. One of the reasons I love pfSense is its full-fledged feature set for everything networking, and I was able to configure the 6 DMZ ports to provide proper segregation between services. With that fleshed out, I no longer needed managed switches and saved a bunch on the lab project. Each bedroom, the game room, the Wi-Fi access point, the dedicated server, and the VM service/test hosts get their own port and network that functions separately from the others, and each network has its own virtual or physical switch.
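One way to plan the addressing for this kind of one-network-per-port layout is to give each internal pfSense interface its own non-overlapping subnet. The sketch below uses Python’s standard `ipaddress` module; the interface names and the `10.10.0.0/16` block are hypothetical examples, not the actual subnets in my lab, and WAN is left out because it normally gets its address from the ISP.

```python
import ipaddress

# Hypothetical addressing plan: one /24 per internal pfSense interface,
# carved from a private 10.10.0.0/16 block. Names and ranges are illustrative.
SUPERNET = ipaddress.ip_network("10.10.0.0/16")
INTERNAL = ["LAN"] + [f"DMZ{i}" for i in range(1, 7)]  # LAN + 6 DMZ ports


def plan_subnets(names, supernet, prefix=24):
    """Assign each interface its own subnet; the firewall takes the first host address."""
    subnets = supernet.subnets(new_prefix=prefix)  # generator of consecutive /24s
    plan = {}
    for name, net in zip(names, subnets):
        gateway = next(net.hosts())  # e.g. 10.10.0.1 for 10.10.0.0/24
        plan[name] = (net, gateway)
    return plan


plan = plan_subnets(INTERNAL, SUPERNET)
for name, (net, gw) in plan.items():
    print(f"{name:5} {net}  gateway {gw}")
```

Non-overlapping subnets are what let pfSense route between the segments at all; the actual isolation comes from the firewall rules you put on each interface.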

For VM duties, I knew well in advance that I was going to use a Hyve Zeus to host my gaming servers, running ESXi with a Windows Server 2022 VM. I could have hosted them on my main server, but it’s terrible practice to put all your eggs in one basket. The main server is a Dell PowerEdge T640 with 2x Xeon Silver 4114 ten-core HT CPUs @ 2.20GHz, 128GB RAM, and a RAID-5 pool of 6x 240GB Intel SSDs giving roughly 1TB of usable space. It’s a large enclosure that can house 18 3.5″ drives, with gobs of expansion capability, but I knew ahead of time that its sole purpose in life was to run all my other general-use VMs and host all my experimental lab setups. If I had put the game servers on there as well, I would constantly be bringing them down to swap out expansion cards or hard drives, since each project involves different hardware I want to try, and I have a dedicated group that plays on the game servers every day. The Hyve Zeus v1 1U server (2x ten-core E5-2670 v2 @ 2.5GHz, 32GB RAM, with rails and riser, no HDD) at $247 had plenty of processing power and memory for this task, and was inexpensive compared to many similar used options from Dell and the like.
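The “roughly 1TB usable” figure follows from how RAID-5 works: one drive’s worth of capacity goes to parity, so usable space is (n − 1) × drive size. A minimal sketch of that arithmetic, ignoring filesystem and controller overhead:

```python
def raid5_usable_gb(drive_count: int, drive_size_gb: float) -> float:
    """RAID-5 spends one drive's worth of capacity on parity."""
    if drive_count < 3:
        raise ValueError("RAID-5 needs at least three drives")
    return (drive_count - 1) * drive_size_gb


# 6x 240GB SSDs, as in the T640 above.
usable_gb = raid5_usable_gb(6, 240)        # decimal GB, as drives are sold
usable_tib = usable_gb * 1e9 / 2**40       # binary TiB, as most OSes report it
print(f"{usable_gb:.0f} GB is about {usable_tib:.2f} TiB")
```

That works out to 1200 GB, which most operating systems will display as about 1.09 TiB.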

eBay – Netgear ProSafe JGS524 24-Port Gigabit Switch – $40

eBay – Micron 240GB 6G 2.5″ SATA SSD – $59

eBay – Dell Broadcom 5719 Quad-Port Gigabit PCIe Network Card – $28

I also wanted to move storage duties from the Dell T640 into a more dedicated enclosure, so storage would stay up while I tinker on the main powerhouse. I chose a Chenbro NR12000 1U (Xeon E3-1230 v2 quad-core HT @ 3.3GHz, 16GB RAM, no HDD) for $216 because of its awesome storage capacity, which allows 12 full-size 3.5″ HDDs internally, and after many weeks of testing I settled on TrueNAS Core for my storage needs. As a standalone file server it’s an amazing package, thanks to how dead simple it makes setting up safe and secure shares, and it uses ZFS right from the get-go. It truly is a set-and-forget setup; I just check in once a month for status and software updates. It was a very inexpensive option with fantastic features: a very capable quad-core hyper-threaded processor and 16GB of RAM, with the OS installed to a ZFS mirror on 240GB SSDs. I did upgrade to 32GB of RAM, as a common rule of thumb for ZFS is 1GB of RAM per TB of storage, and it did indeed make a difference.
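Here is how the 1GB-per-TB rule of thumb plays out for a 12-bay box. The 2TB drive size is a hypothetical example rather than my actual pool, and the rule is applied to raw capacity, which is the more conservative reading; it is a loose guideline, not a hard ZFS requirement.

```python
import math

# Rule of thumb from the post: roughly 1 GB of RAM per TB of storage.
GB_RAM_PER_TB = 1


def suggested_ram_gb(drive_count: int, drive_size_tb: float) -> int:
    """Apply the rule of thumb to raw pool capacity (conservative reading)."""
    raw_tb = drive_count * drive_size_tb
    return math.ceil(raw_tb * GB_RAM_PER_TB)


# 12 bays, as in the Chenbro NR12000; 2 TB per drive is a hypothetical example.
need = suggested_ram_gb(12, 2)
for installed in (16, 32):
    status = "meets" if installed >= need else "falls short of"
    print(f"{installed} GB {status} the ~{need} GB rule of thumb")
```

With a hypothetical 24TB of raw disk, the stock 16GB falls short while 32GB covers it comfortably.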

I’ve included links to the software that was instrumental in getting this project off the ground, and to what I chose for the final setup.

https://www.pfsense.org/download/ – I recommend installing it bare-metal on its own hardware, not in a VM. Snort and pfBlockerNG are available as packages, and both are fantastic. I recommend at least a dual-core x86-64 CPU with 1-2GB of RAM. 20-100GB of storage is plenty, but run two disks in a ZFS mirrored install for redundancy. If you don’t feel like building this yourself, just buy the Netgate 1100 pfSense+ Security Gateway, as it’s a complete device ready to run.

VMware – ESXi Hypervisor – Install it bare-metal, as it needs direct access to the hardware. It’s often found in production environments, so learning it will help there. The free version limits each VM to a maximum of 8 vCPUs (cores/threads) unless you pay for a license, which is expensive.

Proxmox – Hypervisor – A good, free bare-metal OS, but not as polished or widely supported as ESXi. I don’t believe you will find it in a production environment. It has no licensing limitations on hardware or software, and clustering for failover migration is extremely easy to set up.

TrueNAS Core – Download – Can be installed either in a VM or bare-metal, but it’s recommended to do bare-metal. One of the easiest and best ways to set up a robust file server. I recommend only using it for that one specific job.

Coady.Tech Docker Guide – This guide is a straightforward approach to getting a perfectly functioning Docker install with the Portainer Web GUI utilizing Photon OS. Credit goes fully to Coady.Tech for the great writeup and image.

https://developers.redhat.com/articles/faqs-no-cost-red-hat-enterprise-linux – No-cost access to the commercial Red Hat Enterprise Linux, one of the most widely used enterprise server distros and the base that many other distros are built from.

Ubuntu Desktop – Download – Use this for VM desktop clients and for testing basic server setups; for serious servers, use Red Hat. I use it as a VPN client and a console client, since ESXi provides a web interface for managing VMs directly over the network, and I can remote into it from work with TeamViewer, etc.

Pop!_OS – Ubuntu-based Linux distro – Another great VM desktop client, though some Ubuntu packages don’t work on it. Still fantastic. This distro is focused on coding and media production, and offers full-disk encryption right out of the box.

Debian – One of the first Linux distros, and one of the most stable; it can run on almost anything. Highly recommended for VMs to test commands and tinker with.

ServeTheHome.com – Server, Storage, Networking, and Software Reviews – Amazing site for reviews and recommendations about anything regarding servers and the like. Great info for NICs and Server hardware and services.

MVS Collection – Microsoft ISOs – Getting non-evaluation installers for Microsoft’s operating systems is extremely difficult, so this should help you out. Use these for any Windows VM you want, including Server versions.

Microsoft.com – Generic Volume License Keys (GVLK) – Microsoft publishes these keys for use with KMS activation.

KMS Activator – This link has a script that activates Microsoft operating system installs, so you can test all their server and desktop versions in a VM without worrying about expiration. It will absolutely set off virus scanners, but it is not malicious; Microsoft just wants to block its use. I only recommend using this for testing or labs, as using it in a production environment would be piracy.

An important thing to remember with VMs is that CPU threads, available RAM, and fast storage are key. A small 1U server with 2x 10-core CPUs and 64GB of RAM can go a long way and host a dozen VMs. For GUI desktop or server installs I usually allow a minimum of 50GB of storage, but lightweight ones are usually fine with 20GB. I prefer to buy used SSDs on eBay and run either a RAID-5 or RAID-10 configuration, with ZFS as the filesystem if available.

Also note that both ESXi and Proxmox allow overprovisioning, or overcommitment. That means that even though you might only have 10 cores, you can assign 4-8 cores and a generous amount of RAM to as many VMs as you want, as long as they are configured for it. This is NOT ideal if you are running multiple demanding VMs, as they will all start running slowly. You can also set up VM storage as thin disks, which means that even if you size a disk at 50GB, it only grows as space is actually used, so it may only take up 10GB.

Please note that when ESXi and Proxmox refer to CPU cores, they actually mean logical cores, not physical ones. So an 8-core CPU with hyper-threading gives you 16 cores to assign. VMware has posted a security bulletin about a single physical core being shared between different VMs, and how that could lead to vulnerabilities. This can be addressed in one of two ways. The first is to always assign cores in multiples of 2: cores 1 and 2 are always on the same physical core, as are 3 and 4, 5 and 6, and so on. There is also a setting that disables core sharing between VMs, which is just as easy to use.
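The core-counting and overcommitment ideas above can be made concrete with a little arithmetic. This sketch computes logical CPUs, the vCPU and RAM overcommit ratios, and the sibling pairs using the 1-based, adjacent numbering described above. The `Host` class, the helper names, and the VM mix (a dozen VMs at 4 vCPUs and 8GB each) are hypothetical; the hypervisor’s own numbering may start at 0 and may not pair siblings adjacently on every platform, so treat the pairing as an assumption.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Host:
    """Hypothetical description of a VM host."""
    sockets: int
    cores_per_socket: int
    threads_per_core: int = 2  # 2 with Hyper-Threading enabled, 1 without
    ram_gb: int = 64

    @property
    def logical_cpus(self) -> int:
        # Hypervisors count logical CPUs: sockets x cores x threads per core.
        return self.sockets * self.cores_per_socket * self.threads_per_core


def overcommit_ratios(host: Host, vms: list[tuple[int, int]]) -> tuple[float, float]:
    """Return (vCPU ratio, RAM ratio) for VMs given as (vcpus, ram_gb).

    A ratio above 1.0 means more has been assigned than physically exists.
    """
    total_vcpus = sum(vcpus for vcpus, _ in vms)
    total_ram = sum(ram for _, ram in vms)
    return total_vcpus / host.logical_cpus, total_ram / host.ram_gb


def sibling_pairs(host: Host) -> list[tuple[int, int]]:
    """Pair logical CPUs as 1-2, 3-4, ... (assumes adjacent sibling numbering)."""
    if host.threads_per_core != 2:
        raise ValueError("sibling pairs only apply with 2 threads per core")
    return [(i, i + 1) for i in range(1, host.logical_cpus + 1, 2)]


host = Host(sockets=2, cores_per_socket=10, ram_gb=64)
vms = [(4, 8)] * 12  # hypothetical: a dozen VMs with 4 vCPUs and 8 GB RAM each
cpu_ratio, ram_ratio = overcommit_ratios(host, vms)
print(f"logical CPUs: {host.logical_cpus}")
print(f"vCPU overcommit {cpu_ratio:.2f}x, RAM overcommit {ram_ratio:.2f}x")
print(sibling_pairs(Host(sockets=1, cores_per_socket=8))[:3])
```

For the 2x 10-core example, this gives 40 logical CPUs, a 1.20x vCPU overcommit, and a 1.50x RAM overcommit. CPU overcommit at that level is usually tolerable for light VMs; RAM overcommit is where slowdowns tend to show up first.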

This should at least get you started. I hope your lab project is as rewarding as mine!